Hi all,

- We are running a self-hosted Temporal (v1.27.1) deployment on Kubernetes, using Cassandra v5.0.2 as the persistence store. All namespaces are currently configured with a 30-day retention period.

- We’ve noticed that even with moderate workflow activity in some namespaces, disk usage continues to increase steadily, with the `history_node` table accounting for the majority of the storage footprint.

Findings So Far

-

The

history_nodetable is the largest contributor to disk usage across Temporal DB tables (confirmed via Cassandra monitoring). -

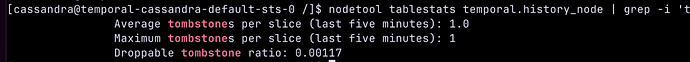

Tombstone metrics for both

history_nodeandexecutionstables are well within acceptable limits. No compaction issues were observed. -

The

gc_grace_secondsis set to the default value of864000seconds (10 days), aligned with Cassandra best practices. -

We’ve verified that event history sizes are relatively small for completed workflows — nothing in the multi-MB range.

-

WorkflowExecutionRetentionTtl is confirmed to be 30 days for all namespaces.

-

Purging completed workflows is not feasible for us, as our frontend applications rely on workflow metadata for status display and analytics.
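For reference, here is a rough sanity check on how the two settings above combine. This is only a sketch based on our understanding, not confirmed Temporal or Cassandra behavior: it assumes history rows are deleted right when the retention period expires, and that Cassandra can physically drop them only once `gc_grace_seconds` has passed and a compaction covers the relevant SSTables. The function name and the close time are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Values taken from our setup as described above.
RETENTION = timedelta(days=30)        # WorkflowExecutionRetentionTtl
GC_GRACE = timedelta(seconds=864000)  # gc_grace_seconds on history_node (10 days)


def earliest_reclaim_time(close_time: datetime) -> datetime:
    """Best-case time at which disk space for a closed workflow could be freed.

    Assumption: the delete is issued exactly at retention expiry, and a
    compaction purges the tombstoned rows as soon as gc_grace_seconds elapses.
    In practice both steps can lag, so this is a lower bound.
    """
    return close_time + RETENTION + GC_GRACE


closed = datetime(2025, 1, 1, tzinfo=timezone.utc)  # hypothetical close time
print(earliest_reclaim_time(closed).date())  # 2025-02-10
print((RETENTION + GC_GRACE).days)           # 40
```

If these assumptions hold, data for any given workflow stays on disk for at least about 40 days after it closes, so some steady growth is expected until the deployment has been running longer than that window.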

Ask

We would appreciate guidance on the following:

- Are there any recommended best practices for managing storage in Cassandra without purging workflows (due to downstream dependencies on historical metadata)?

- Is there any Temporal server-level configuration (other than namespace-level retention) that could help limit or manage storage growth more effectively in Cassandra?

Related Thread

- We came across this related community post, which closely resembles our case:

  - Cassandra history_node table keeps growing

- However, we’re still unclear on which safe and practical next steps we can take without compromising system behavior or user-facing analytics.