Hello Team,

We are seeing a memory leak, and CPU usage gradually climbing to 100%, on our frontend service deployed in k8s. We are running Temporal server v1.14.4 with JWT-based authentication and server-side TLS enabled.

Because of this, most calls to the Temporal server fail with `context deadline exceeded` errors, because the frontend is too choked to handle more requests. Things return to normal for a while after we restart the frontend service, but as clients reconnect, CPU and memory gradually climb back to 100%.

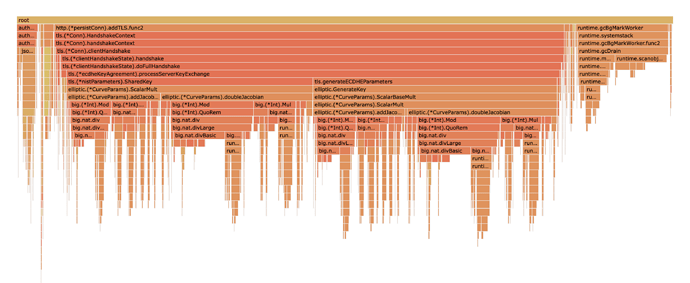

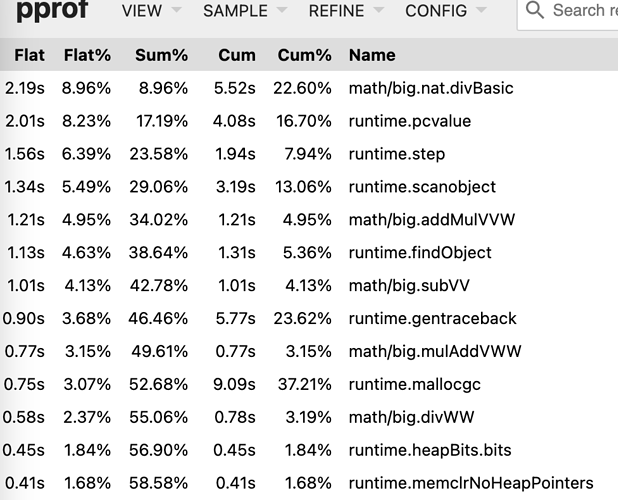

To investigate, we enabled profiling in the server using the `runtime/pprof` package. The profiles show that most of the CPU time and memory is consumed by TLS handshakes, and usage grows each time a TLS client connects. Our assumption is that memory is allocated on every new client connection (in `math/big.nat`) and that the GC does not reclaim it, so memory eventually hits its limit.

We also see the following entries in our logs. They come from the call that retrieves the Azure AD public keys used to verify incoming JWT tokens:

```
{"level":"error","ts":"2022-03-22T12:16:47.843Z","msg":"error while refreshing token keys: ": net/http: TLS handshake timeout","logging-call-at":"default_token_key_provider.go:123","stacktrace":"go.temporal.io/server/common/log.(*zapLogger).Error\n\t/go/pkg/mod/go.temporal.io/server@v1.14.4/common/log/zap_logger.go:142\ngo.temporal.io/server/common/authorization.(*defaultTokenKeyProvider).timerCallback\n\t/go/pkg/mod/go.temporal.io/server@v1.14.4/common/authorization/default_token_key_provider.go:123"}
```

To recap, we are on v1.14.4 with JWT and TLS enabled.

Have you encountered this issue before? Has it been addressed in a newer release? If not, could you let us know how we can fix it?

Please find the attached screenshots for reference, and let me know if you need more details.

Thanks,

Dhanraj