Hello everyone,

We need help understanding the root cause of an error we observed.

The workflowId mentioned in the attached log was already terminated via the UI on 2025-09-01 (we had to terminate a batch of workflows because CPU utilization on our self-hosted Temporal server had reached 100%). However, on 2025-09-02, we still saw a log entry with the error:

“workflow execution already completed”

Could someone explain why this might occur? Is it expected behavior, or could this be due to a data synchronization issue between Temporal components?

FYR,


Hi,

I think this is expected; note that the log level is info.

When a workflow is terminated, there can be stale tasks (workflow or activity tasks) left in the Temporal server. When the matching service tries to dispatch a task for a closed workflow, it calls the history service to record the started event (RecordWorkflowTaskStarted/RecordActivityTaskStarted). History responds with "workflow execution already completed", and the matching service then drops the task.
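A minimal Go sketch of the flow described above, under loudly stated assumptions: this is not the Temporal server's actual code, and `task`, `historyStub`, `recordTaskStarted`, `dispatch` and `errWorkflowCompleted` are hypothetical simplifications. It only illustrates why a stale task for a terminated workflow produces an info-level log line and is then dropped instead of being retried.

```go
package main

import (
	"errors"
	"fmt"
)

// errWorkflowCompleted is a hypothetical stand-in for the error the history
// service returns when a task's workflow run is already closed.
var errWorkflowCompleted = errors.New("workflow execution already completed")

// task is a hypothetical, simplified backlog entry in a task queue.
type task struct {
	WorkflowID string
	RunID      string
}

// historyStub simulates the history service; it only tracks which runs are closed.
type historyStub struct {
	closed map[string]bool // keyed by run ID
}

// recordTaskStarted loosely mimics RecordWorkflowTaskStarted: it refuses
// tasks that belong to closed runs.
func (h *historyStub) recordTaskStarted(t task) error {
	if h.closed[t.RunID] {
		return errWorkflowCompleted
	}
	return nil
}

// dispatch walks the backlog once. Tasks of closed runs are logged at info
// level and dropped; any other error puts the task on the retry list.
func dispatch(backlog []task, h *historyStub) (delivered, dropped, retry []task) {
	for _, t := range backlog {
		err := h.recordTaskStarted(t)
		switch {
		case err == nil:
			delivered = append(delivered, t)
		case errors.Is(err, errWorkflowCompleted):
			fmt.Printf("info: dropping stale task wf-id=%s: %v\n", t.WorkflowID, err)
			dropped = append(dropped, t)
		default:
			retry = append(retry, t)
		}
	}
	return delivered, dropped, retry
}

func main() {
	h := &historyStub{closed: map[string]bool{"run-1": true}}
	backlog := []task{
		{WorkflowID: "wf-a", RunID: "run-1"}, // terminated via the UI
		{WorkflowID: "wf-b", RunID: "run-2"}, // still running
	}
	delivered, dropped, retry := dispatch(backlog, h)
	fmt.Println(len(delivered), len(dropped), len(retry))
}
```

In this sketch, each stale task is dropped after a single failed start attempt, so a large backlog left behind by a mass termination yields one info line per leftover task rather than an error.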

@antonio.perez,

It’s been two days since we terminated the workflows,

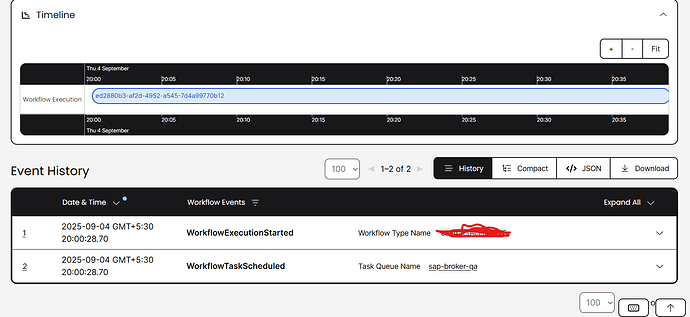

but we are still seeing the Temporal server trying to process tasks for the terminated workflows.

Here’s one of the logs from the Temporal server. The workflowId you see below was terminated on 1 September. Do you see anything abnormal here? Our workflows are processing very slowly: workflow tasks get scheduled and nothing is processed after that. Can you please help us out here? We have been stuck for 3 days.

@antonio.perez , @maxim

One more critical observation from the logs: since the workflow was terminated, there are literally 214k log entries in the server with the same workflow ID, the same message “Workflow task not found” and the error “workflow execution already complete” (the task-queue-id differs between entries).

I’m not sure what’s happening. Can you please help us out? We have been blocked on this for 4 days.

Hi,

> There are literally 214k logs in the server, with the same workflow-id and same message “Workflow task not found” and with error “workflow execution already complete” since the workflow termination. (task-queue-id is different)

Is it the same log (the info-level message printed at matching_engine.go:546)? Are there any error logs?

How many workflows did you terminate?

Can you share the server configuration and version?

Hi @antonio.perez ,

We terminated around 4k workflows (with different workflowIds) in the namespace you see in the log; across all namespaces we terminated around 17k workflows.

Yes, all of them have the same log.

Today I can still see the same log for the same workflowId in the server.

These are the Temporal server versions we are using:

We have Temporal self-hosted on AWS ECS. The UI and server together are allocated 2 vCPU and 50 GB of memory.

FYI,

On 30 August, due to unexpected load across multiple namespaces, CPU utilization spiked to 100 percent. For almost two days, workflows that had been started were stuck at the same point and CPU stayed at 100%. Later, on 1 and 2 September, we terminated all the running workflows. CPU utilization has returned to normal, but there was (and still is) a lot of slowness in workflow processing.

When I checked the logs, I observed the behavior I described in this thread.

Please help us in finding the root cause for this issue.

@antonio.perez, can you please help us out here?

Thanks.

@maxim @antonio.perez

Can you please help us out? Thanks.